
Theoretical cognitive neuroscience lab

M. W. Howard, Principal Investigator

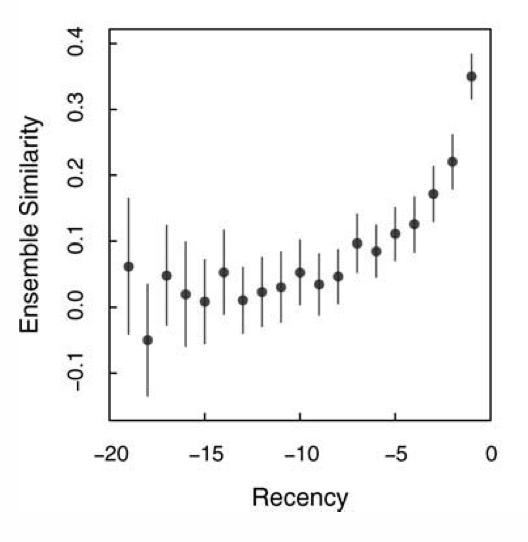

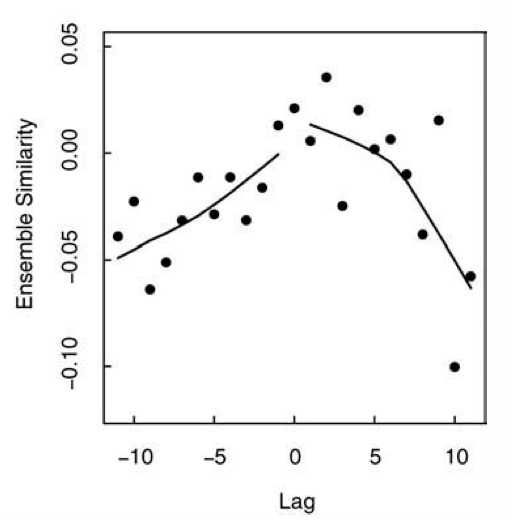

Our experience of the passage of time is intimately entwined with memory. The passage of time has two dramatic effects in episodic memory experiments. The recency effect refers to the finding that, all other things being equal, memory for more recent events is better than memory for less recent events. The contiguity effect refers to the finding that when one remembers an event, other events that were experienced close in time to it also come to mind. Both effects persist over both short and long time scales. Both can be explained if one assumes that the cue for memory is a gradually changing state of temporal context. In this view, the recency effect results because the state of temporal context at any moment resembles the encoding context of stimuli experienced a short time ago more than it resembles the encoding context of stimuli experienced further in the past. These models predict the contiguity effect if remembering a stimulus causes a "jump back in time" to the state of temporal context that was present when that stimulus was previously experienced. Both of these phenomena, a gradually changing memory state and a jump back in time caused by repeated stimuli, have been observed in the human brain (below, left; Howard et al., 2012; see also Manning et al., 2011). This raises the question of what mathematical form governs the change in temporal context from one moment to the next. We have developed a specific mathematical form for temporal context (Shankar & Howard, 2012) that is scale-free and retains information about the time at which past stimuli were experienced. This form enables an account of a broad variety of cognitive phenomena in episodic memory, interval timing, and conditioning (see also Howard et al., submitted). Colleagues in the BU Center for Memory & Brain have recently recorded from neurons in the rodent MTL that appear to show the signature of this mathematical hypothesis (below, right; MacDonald et al., 2011).
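The recency and contiguity predictions can be illustrated with a minimal sketch in pure Python. It assumes one-hot (orthonormal) item inputs, a fixed drift rate, and a simple blending rule for retrieval; the helpers `study_list` and `jump_back` and the parameters `rho` and `beta` are hypothetical illustrations, not the lab's published model. With the default values (`rho = 0.8`, `beta = 0.6`, so that `rho**2 + beta**2 = 1`), the similarity between the current context and an item's encoding context falls off as 0.8 raised to the number of intervening items.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def one_hot(i, dim):
    v = [0.0] * dim
    v[i] = 1.0
    return v

def study_list(n_items, rho=0.8, beta=0.6):
    """Present n_items distinct items; return each item's encoding context and the final context.

    Assumption: item i is coded by basis vector i + 1; basis vector 0 is the pre-list context.
    """
    dim = n_items + 1
    context = one_hot(0, dim)
    encoding = []
    for i in range(n_items):
        item_input = one_hot(i + 1, dim)
        # Gradual drift: keep a decayed copy of the old state, add the new input, renormalize.
        context = normalize([rho * c + beta * f for c, f in zip(context, item_input)])
        encoding.append(context)
    return encoding, context

def jump_back(context, encoding, item, rho=0.8, beta=0.6):
    """Repeating `item` retrieves its encoding context and blends it into the current state."""
    return normalize([rho * c + beta * r for c, r in zip(context, encoding[item])])

encoding, now = study_list(20)
recency = [dot(now, e) for e in encoding]           # similarity to each item's encoding context
after_repeat = jump_back(now, encoding, item=4)     # item 4 (0-based) is presented again
contiguity = [dot(after_repeat, e) for e in encoding]

print([round(x, 3) for x in recency[-5:]])
print([round(x, 3) for x in contiguity[:9]])
```

In this sketch `recency` rises steadily toward the most recent item. After item 4 is repeated, `contiguity` shows a local peak at item 4 with elevated values for its neighbors on both sides, superimposed on the remaining recency gradient. The bump is symmetric here because retrieval only reinstates the stored context.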


Neural recency and contiguity effects. Ensembles of human MTL neurons were recorded during a continuous recognition task. The ensemble state changed gradually over tens of seconds (left). When a stimulus was repeated, the ensemble response resembled the states that neighbored the original presentation in both the forward and backward directions (right). Critically, the repeated item caused a "jump back in time." See Howard, Viskontas, Shankar and Fried (2012) for details.

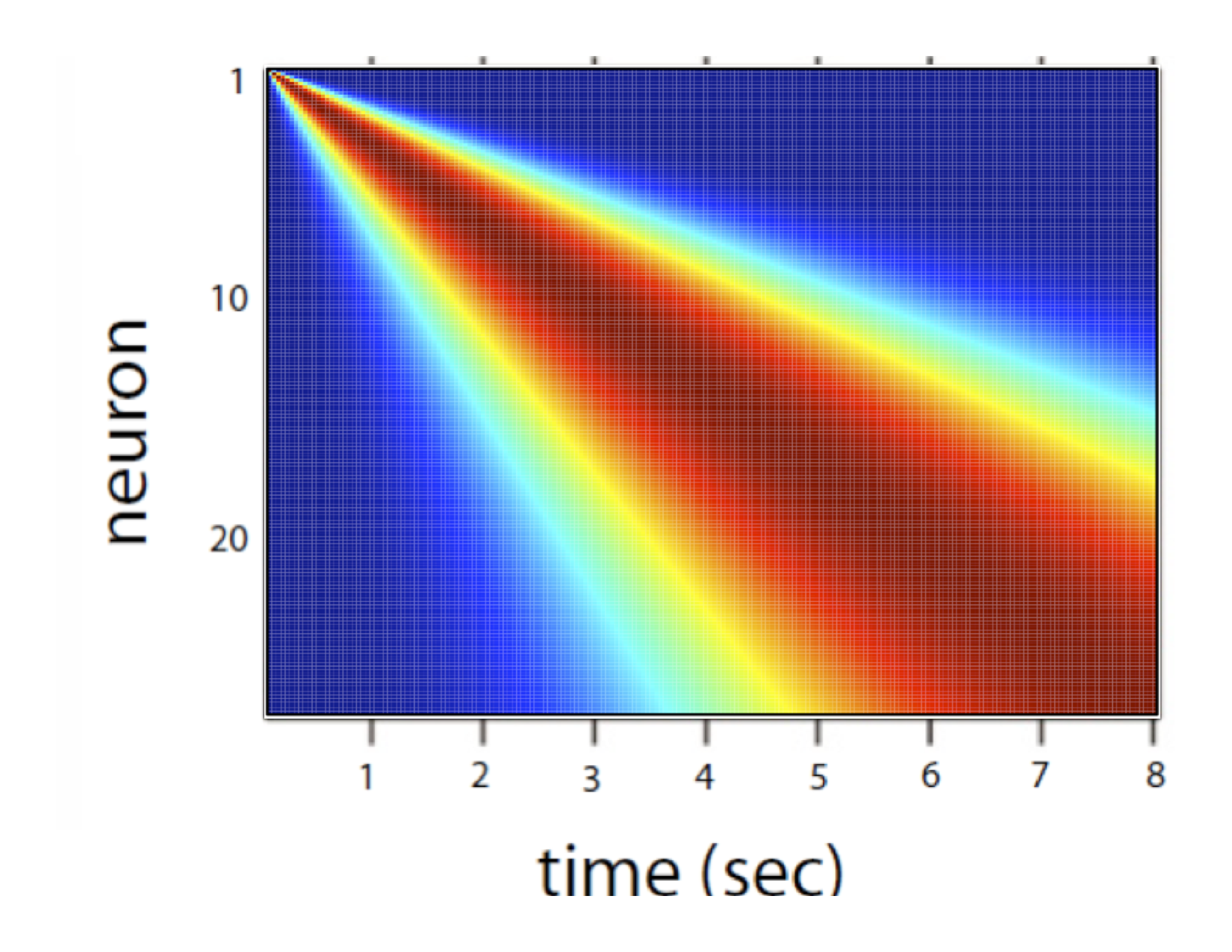

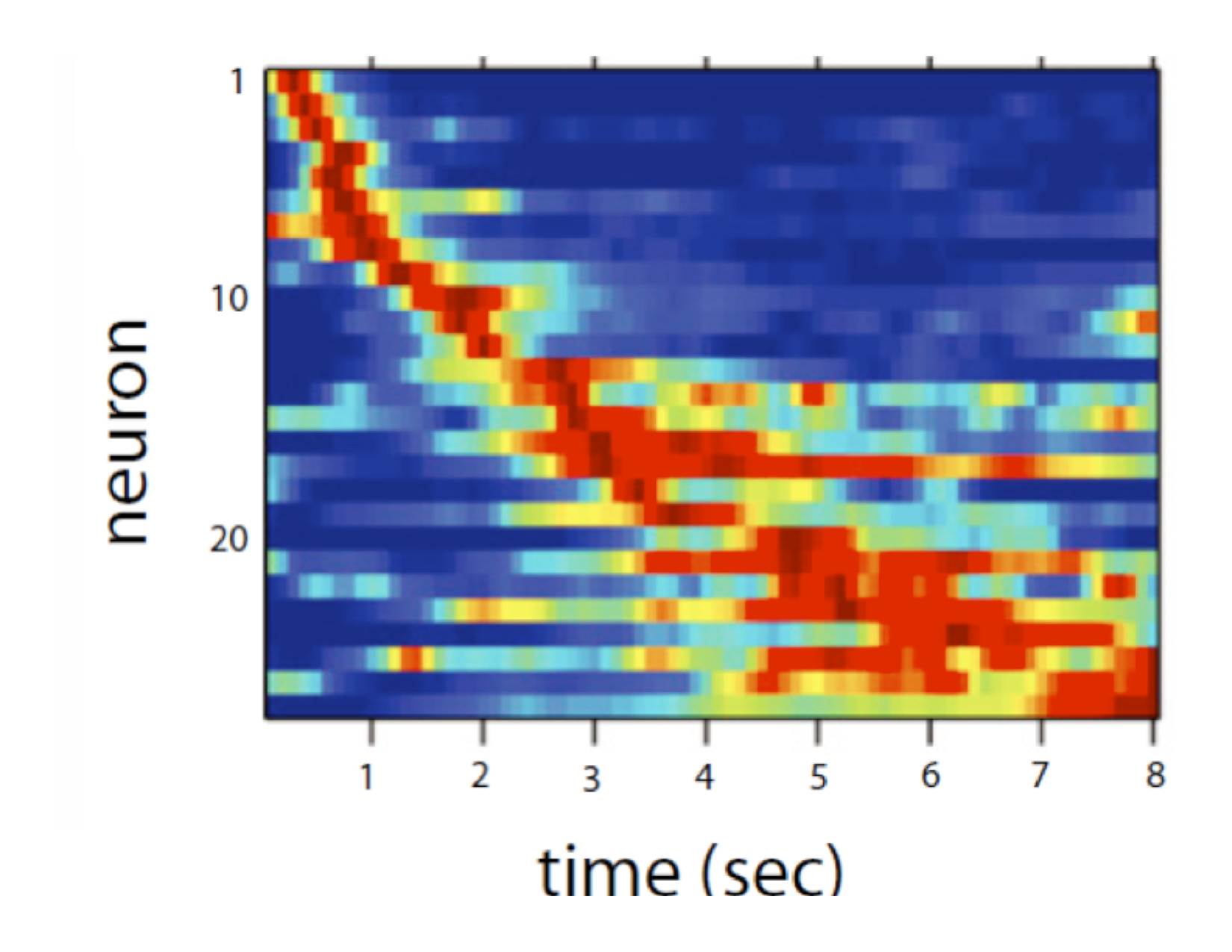

A distributed representation of temporal history. The firing rate of a set of simulated (left) and real (right) neurons is shown as a function of time. Each cell is a row; hot colors indicate a higher firing rate. Left: The mathematical model predicts that neurons coding for temporal context should respond to their preferred stimulus not immediately, but after some delay. The temporal spread of a cell's firing should increase linearly with the delay at which it responds. Right: Simultaneously recorded neurons in the dorsal hippocampus during the delay period of a non-spatial memory task. Cells are sorted by the time of their peak activity. Every neuron that fired reliably during the delay period is shown. After MacDonald et al. (2011).
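The caption's prediction, that a cell's temporal spread grows linearly with its preferred delay, can be checked numerically. The sketch below assumes our reading of the scale-free representation of Shankar & Howard (2012): a bank of leaky integrators holding the Laplace transform of stimulus history, read out with Post's approximate inverse of order `k`, for a single stimulus at t = 0. The choice `k = 4`, the time grid, and the function names are illustrative assumptions.

```python
import math

def time_cell_rate(t, tau_star, k=4):
    """Firing of a cell with preferred delay tau_star, t time units after a single stimulus.

    Assumes the stimulus is an impulse at t = 0, so the integrator with decay rate
    s = k / tau_star holds F(s, t) = exp(-s * t). Post's approximate inverse,
    (-1)**k / k! * s**(k + 1) * d^k F / ds^k, then reduces to the expression below,
    which peaks at t = tau_star and whose shape depends on t only through t / tau_star.
    """
    if t <= 0:
        return 0.0
    x = t / tau_star
    return (k ** (k + 1) / math.factorial(k)) / tau_star * x ** k * math.exp(-k * x)

def peak_and_width(tau_star, k=4, dt=0.01, t_max=100.0):
    """Return (time of peak firing, full width at half maximum) measured on a time grid."""
    times = [i * dt for i in range(1, int(t_max / dt))]
    rates = [time_cell_rate(t, tau_star, k) for t in times]
    peak = max(rates)
    t_peak = times[rates.index(peak)]
    above_half = [t for t, r in zip(times, rates) if r >= peak / 2]
    return t_peak, above_half[-1] - above_half[0]

results = {tau: peak_and_width(tau) for tau in (1.0, 2.0, 4.0, 8.0)}
for tau_star, (t_peak, width) in results.items():
    print(f"tau*={tau_star:4.1f}  peak={t_peak:5.2f}  width={width:5.2f}  width/peak={width / t_peak:.2f}")
```

Each simulated cell peaks at its own `tau_star`, and the ratio of width to peak time is the same for every cell up to grid resolution, which is the linear scaling described in the caption.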

To develop physical models of cognition, it is not sufficient to have an elegant theory that describes cognitive performance; one must also account for the firing of the neurons in the brain that support that cognition. At the cognitive level, the medial temporal lobe (MTL) is essential for episodic memory, which is hypothesized to reflect a jump back to a prior state of temporal history. The firing of MTL neurons has been extensively studied in rats as they maneuver around two-dimensional spatial environments. Many neurons in the MTL fire preferentially when the animal is in one particular region of the environment (e.g., O'Keefe & Burgess, 1996; Wilson & McNaughton, 1993). These "place cells" could be used to construct an estimate of the animal's current position. What does the representation of position have to do with temporal context? The mathematics of our hypothesis suggests an answer. The representation of temporal context codes the time elapsed since a past stimulus was experienced. By modulating this representation with the animal's current velocity, the same computation can code for the net distance traveled since a stimulus was experienced. In this way, a single computational framework can code for both temporal and spatial context. We are working to elaborate these ideas into a detailed neurophysiological model of spatial firing correlates in the MTL. This line of work is supported by AFOSR.
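A minimal sketch of the velocity-modulation idea, assuming the leaky-integrator form of temporal history in which each unit decays at its own rate `s` after a stimulus. Multiplying that decay rate by the animal's current speed (our assumption about how velocity enters) makes the stored state depend on distance traveled rather than elapsed time. The speeds, decay rates, and helper names (`integrate`, `readout`) are hypothetical.

```python
import math

def integrate(s_values, speeds, dt, modulate_by_velocity=True):
    """Leaky integrators dF/dt = -s * g(t) * F, started by a stimulus at t = 0.

    g(t) is the animal's speed when modulate_by_velocity is True and 1 otherwise.
    Each step applies the exact exponential decay for a speed held constant over dt.
    """
    F = [1.0] * len(s_values)  # the stimulus sets every integrator to 1
    for v in speeds:
        g = v if modulate_by_velocity else 1.0
        F = [f * math.exp(-s * g * dt) for f, s in zip(F, s_values)]
    return F

def readout(F, s_values):
    """Recover the quantity each integrator has accumulated: -ln(F) / s."""
    return [-math.log(f) / s for f, s in zip(F, s_values)]

s_values = [0.25, 0.5, 1.0, 2.0]  # hypothetical spectrum of decay rates
dt = 0.001
slow = [0.5] * 4000               # 4 s at 0.5 m/s: 2 m traveled
fast = [2.0] * 1000               # 1 s at 2.0 m/s: also 2 m traveled

results = {}
for modulate in (True, False):
    results[modulate] = (
        readout(integrate(s_values, slow, dt, modulate), s_values),
        readout(integrate(s_values, fast, dt, modulate), s_values),
    )
    label = "velocity-modulated" if modulate else "time only"
    print(label, [round(x, 3) for x in results[modulate][0]], [round(x, 3) for x in results[modulate][1]])
```

With velocity modulation, a slow run and a fast run that cover the same 2 m leave identical states, and the readout recovers the distance; without it, the same units report the 4 s and 1 s that elapsed. This sketch uses scalar speed only and so ignores the direction of travel.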


Spatial firing correlates predicted by the representation of temporal history when modulated by velocity. Left: animals moved around environments of various shapes. Center: "boundary vector cells" respond when the animal is a certain distance from a boundary. Right: a simulated cell whose preferred stimulus is a boundary with a tangent that faces east; this simulated cell responds when its preferred stimulus is a certain distance away. Data from Lever et al. (2009). The simulation was generated by Qian Du while she was a rotation student in our lab.

The function of memory is not simply to remember the past but also to predict the future, so that the animal can behave adaptively. We can understand statistical learning of sequentially organized stimuli, such as language, as a reflection of prediction. Mathematically, the representation of temporal history can be used to predict future stimuli. In this model, each potential future stimulus is predicted to the extent that its encoding states match the current state of temporal history. By manipulating the structure of the learning experience, we can measure the degree to which human subjects predict different stimuli in statistical learning experiments, which lets us infer properties of the hypothesized temporal representation. Language learning can be treated as a very large statistical learning problem. Consider the novel word in the sentence "The baker reached into the oven and pulled out the FLOOB." Here, the reader estimates the meaning of FLOOB in terms of the words that would fit into that slot, that is, the other words that were predicted when FLOOB was experienced. A computational model that exploits this idea using older variants of temporal context does well at estimating the meaning of words after training on natural text (Howard et al., 2011; Shankar et al., 2009). The same approach is tractable using the representation of temporal history and should result in dramatically improved performance. This line of work is supported by NSF.
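The prediction idea and the FLOOB example can be sketched in a few lines. This sketch deliberately swaps in a much simpler context than the lab's representation of temporal history: an exponentially decaying sum of the preceding words (decay `RHO`), reset at each sentence. Each word's encoding state is the sum of the contexts that preceded it, and every known word is scored by the cosine between its encoding state and the current context. The three-sentence corpus, the decay value, and all function names are hypothetical.

```python
import math
from collections import defaultdict

RHO = 0.5  # hypothetical per-word decay of the context

def drift(context, word, rho=RHO):
    """Decay every entry of the sparse context vector and add the current word."""
    new = {w: rho * v for w, v in context.items()}
    new[word] = new.get(word, 0.0) + 1.0
    return new

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(w, 0.0) for w, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def train(sentences):
    """Sum, for each word, the context states that preceded it (its encoding states)."""
    encoding = defaultdict(dict)
    for sentence in sentences:
        context = {}  # assumption: context is reset at every sentence boundary
        for word in sentence.split():
            for w, v in context.items():
                encoding[word][w] = encoding[word].get(w, 0.0) + v
            context = drift(context, word)
    return encoding

def predict(encoding, context):
    """Score every known word by how well its encoding states match the current context."""
    return {word: cosine(states, context) for word, states in encoding.items()}

corpus = [
    "the baker pulled out the bread",
    "the baker pulled out the pie",
    "the driver parked the car",
]
encoding = train(corpus)

# Context just before the novel word in the example sentence.
context = {}
for word in "the baker reached into the oven and pulled out the".split():
    context = drift(context, word)

floob_meaning = predict(encoding, context)
print(sorted(floob_meaning, key=floob_meaning.get, reverse=True)[:3])
```

In this toy setting the words predicted most strongly in the FLOOB slot are `bread` and `pie`, so the prediction vector places FLOOB near them and away from `car`. A realistic version would be trained on a large natural-text corpus.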