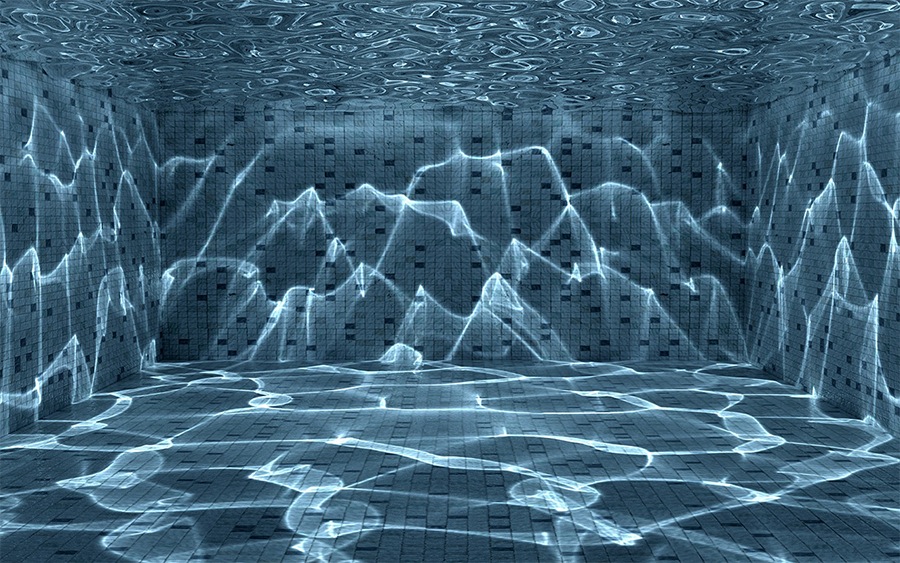

The first stage of the algorithm resembles filtering that occurs in the retina, thalamus and primary layers of cortex. The next step derives from the observation that different neurons respond to distinct parts of an image at slightly different times. If these temporal variations are translated into spatial dislocations, then uniform fields are distorted, planes acquire curvature, and fractures arise. (A physical analogy for this process can be found in the underwater light caustics created by waves on the surface of a swimming pool.) The process is related to new algorithms for time-frequency analysis.
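The step from timing differences to spatial distortion can be illustrated with a one-dimensional toy model. This is only a sketch under stated assumptions, not the algorithm described here: the function names, the sinusoidal latency profile, and the `velocity` parameter that converts delay into displacement are all hypothetical. It places evenly spaced points on a unit interval, shifts each one in proportion to its local delay, and counts how many land in each bin.

```python
import math


def example_delay(x):
    """Hypothetical latency profile: one smooth cycle across the field."""
    return math.sin(2 * math.pi * x)


def displace_uniform_field(n_points=1000, n_bins=10, velocity=0.05,
                           delay=example_delay):
    """Shift evenly spaced points on [0, 1) by velocity * delay(x), then bin them.

    `velocity` is an assumed conversion factor from temporal delay to
    spatial displacement; it is not a quantity taken from the text.
    """
    counts = [0] * n_bins
    for i in range(n_points):
        x = (i + 0.5) / n_points                    # uniform starting position
        shifted = (x + velocity * delay(x)) % 1.0   # wrap around at the edges
        counts[int(shifted * n_bins) % n_bins] += 1
    return counts


if __name__ == "__main__":
    print("no delay:  ", displace_uniform_field(velocity=0.0))
    print("with delay:", displace_uniform_field(velocity=0.05))
```

With `velocity=0.0` every bin receives the same number of points. With a nonzero value the points bunch where the displacement compresses the field and thin out where it stretches it, which is the same mechanism that concentrates light into bright lines on the floor of a pool.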